Abstract

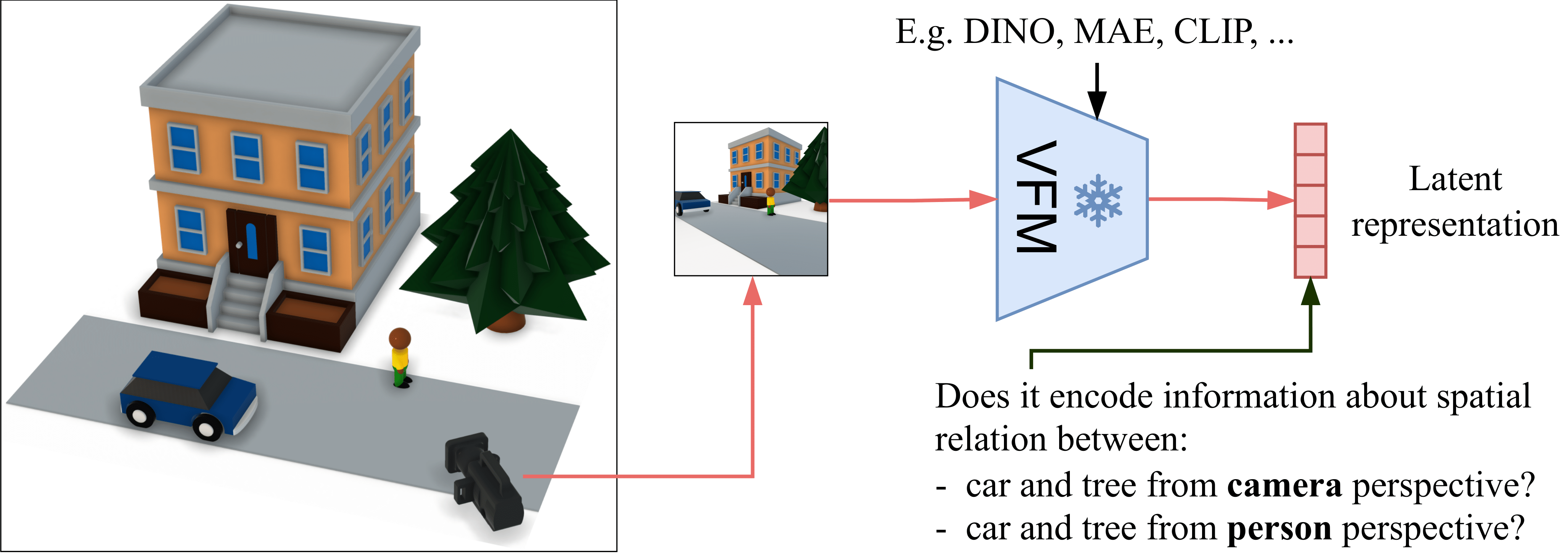

Visual Foundation Models (VFMs), such as DINO and CLIP, exhibit strong semantic understanding but limited spatial reasoning, which restricts their applicability to embodied systems. Recent work incorporates 3D tasks (such as depth estimation) into VFM training. However, VFM performance remains inconsistent across tasks, raising the question: do these models truly have spatial awareness, or do they overfit to specific 3D objectives?

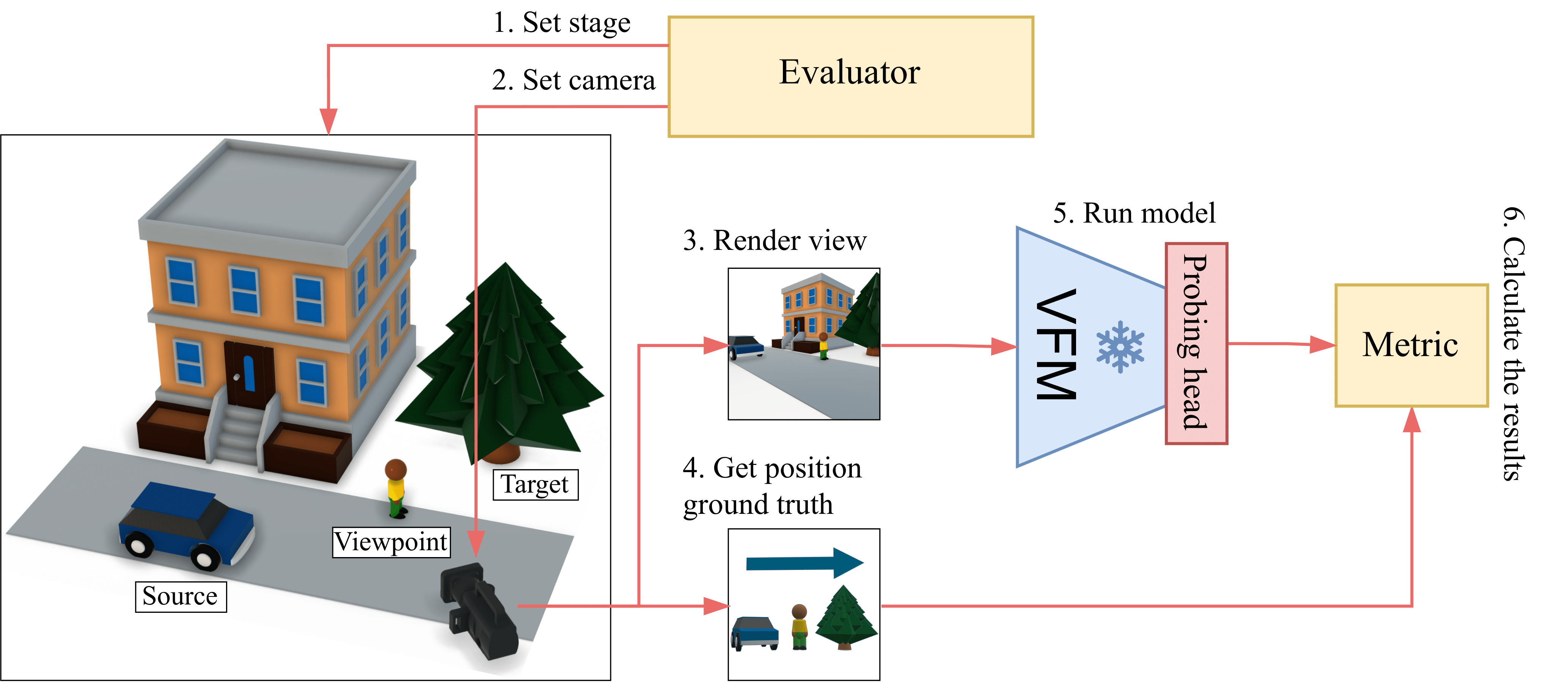

To address this question, we introduce the Spatial Relation Recognition Task (SpaRRTa) benchmark, which evaluates how well VFM representations encode the relative positions of objects across different viewpoints. SpaRRTa can generate an arbitrary number of photorealistic images with diverse scenes and fully controllable object arrangements, along with freely accessible spatial annotations.
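To make the task concrete, here is a minimal sketch of how a spatial relation label could be derived from object positions and a viewpoint. It is an illustration only, not the benchmark's actual annotation code: the function name `spatial_relation`, the 2D ground-plane coordinates, the four-way label set, and the assumption that the viewer looks straight at the reference object are all hypothetical choices.

```python
import math


def spatial_relation(viewer, reference, target):
    """Label where `target` lies relative to `reference`, as seen by `viewer`.

    All arguments are (x, y) ground-plane coordinates (an assumption of this
    sketch). The viewer is assumed to look straight at the reference object.
    """
    # Unit vector pointing from the viewer to the reference object.
    fx, fy = reference[0] - viewer[0], reference[1] - viewer[1]
    norm = math.hypot(fx, fy)
    if norm == 0:
        raise ValueError("viewer and reference must be at different positions")
    fx, fy = fx / norm, fy / norm

    # The viewer's right-hand direction: forward rotated 90 degrees clockwise.
    rx, ry = fy, -fx

    # Offset of the target from the reference, expressed in the viewer's frame.
    dx, dy = target[0] - reference[0], target[1] - reference[1]
    forward = dx * fx + dy * fy
    right = dx * rx + dy * ry

    # Pick the dominant axis to get one of four labels.
    if abs(right) > abs(forward):
        return "right" if right > 0 else "left"
    return "behind" if forward > 0 else "front"


reference, target = (0.0, 0.0), (2.0, 0.0)
print(spatial_relation((0.0, -5.0), reference, target))  # right
print(spatial_relation((0.0, 5.0), reference, target))   # left
```

The same object arrangement yields different labels from opposite sides of the scene, which is why the label has to be defined with respect to a viewpoint.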

Key Statistics

Key Findings

Main Results

- Spatial information is patch-level: Spatial relations are primarily encoded at the patch level and largely obscured by global pooling

- 3D supervision enriches patch features: VGGT (3D-supervised) shows improvements only with selective probing, not linear probing

- Allocentric reasoning is challenging: All models struggle with perspective-taking tasks compared to egocentric variants

- Environment complexity matters: Performance degrades significantly in cluttered environments like City scenes
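The first finding can be illustrated with a toy example of why global pooling can hide spatial relations. The sketch below is not the paper's probing setup: the 1x4 patch grid, the two-dimensional "object detector" features, and the helper names `mean_pool`, `column_of`, and `patch_level_relation` are assumptions made purely for illustration.

```python
def mean_pool(patches):
    """Average a list of patch feature vectors into one global vector."""
    n = len(patches)
    dim = len(patches[0])
    return [sum(p[i] for p in patches) / n for i in range(dim)]


def column_of(patches, channel):
    """Index of the patch where the given feature channel is strongest."""
    return max(range(len(patches)), key=lambda i: patches[i][channel])


def patch_level_relation(patches):
    """Read the left/right relation directly from patch positions."""
    if column_of(patches, 0) < column_of(patches, 1):
        return "A left of B"
    return "A right of B"


# Toy features: channel 0 fires on object A, channel 1 fires on object B.
background, obj_a, obj_b = [0.0, 0.0], [1.0, 0.0], [0.0, 1.0]

# Two images containing the same objects in mirrored arrangements.
a_left_of_b = [obj_a, background, background, obj_b]
a_right_of_b = [obj_b, background, background, obj_a]

# The pooled vectors are identical, so no probe on them can tell the two apart.
print(mean_pool(a_left_of_b) == mean_pool(a_right_of_b))  # True

# The patch-level features still carry the arrangement.
print(patch_level_relation(a_left_of_b))   # A left of B
print(patch_level_relation(a_right_of_b))  # A right of B
```

Real VFM features are far richer than this, and pooled or class-token features may retain some positional signal, so the example only shows the mechanism by which pooling can discard arrangement information.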

Environments

Evaluation Pipeline

Authors

Affiliations

Citation

If you find SpaRRTa useful in your research, please cite our paper:

@article{kargin2025sparrta,
  title={SpaRRTa: A Synthetic Benchmark for Evaluating Spatial Intelligence in Visual Foundation Models},
  author={Kargin, Turhan Can and Jasiński, Wojciech and Pardyl, Adam and Zieliński, Bartosz and Przewięźlikowski, Marcin},
  journal={arXiv preprint arXiv:XXXX.XXXXX},
  year={2025}
}

Acknowledgments

This work was supported by the Polish National Science Center and conducted at the Faculty of Mathematics and Computer Science, Jagiellonian University.